Face tracking, 3D avatar algorithmic performance, video, interactive installation

3.5 m * 5.5 m, Säulengang, Kunsthochschule Kassel

4 m * 4 m, Examen, Documenta halle, Kassel

3.5 m * 3.5 m, CAA art museum, Hangzhou, China

Full video

Video and installation

I wish to ask the AI and the machine:

- Can automatic 3D modeling software generate an unbiased human avatar?

- Can you recognize Asian eyes as open?

- Do you believe that all Asians look alike?

- If I were a black girl, should I be labelled as a gorilla?

- If someday I were disfigured, could you still recognize me?

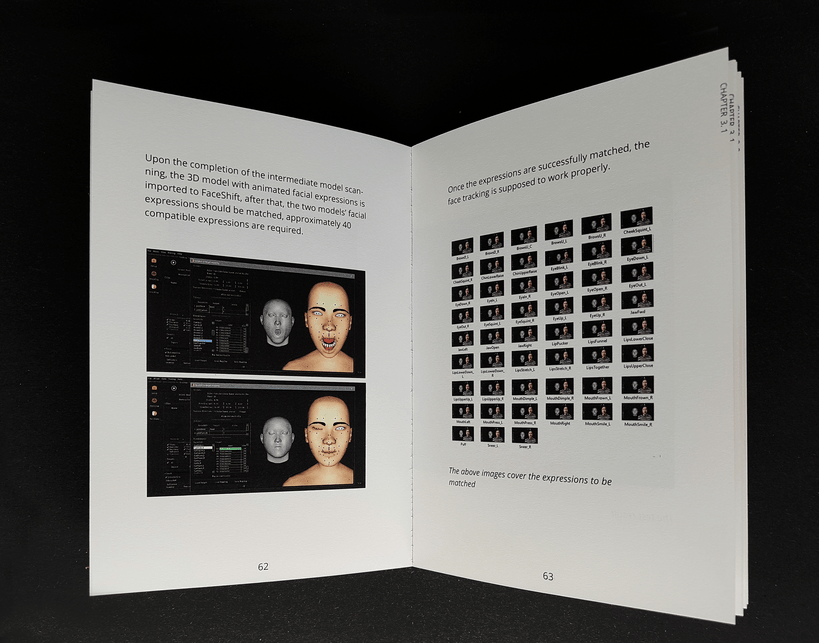

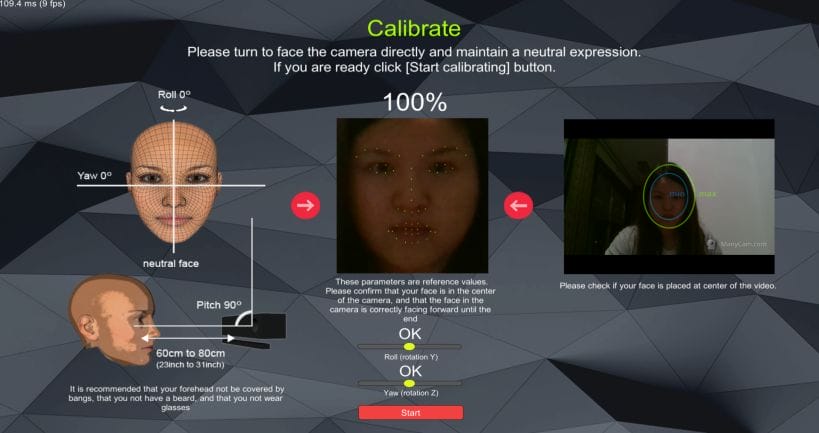

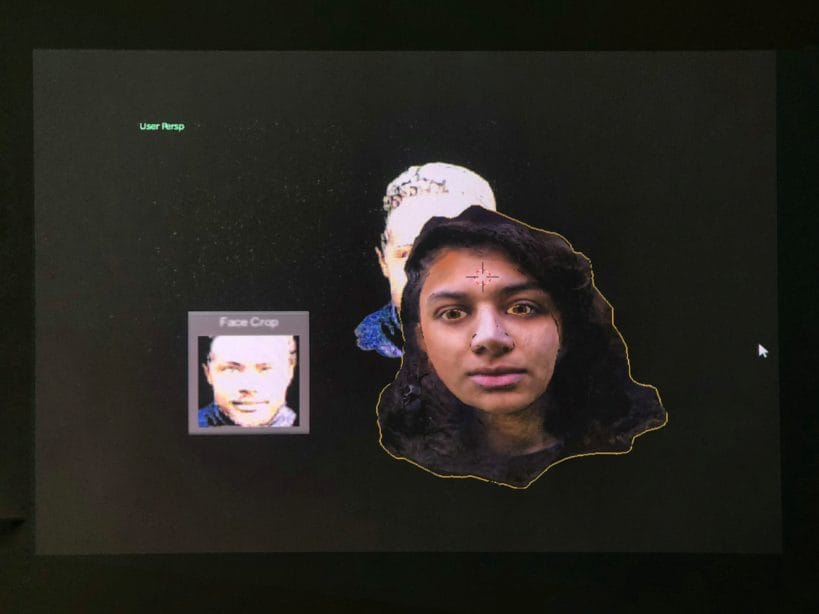

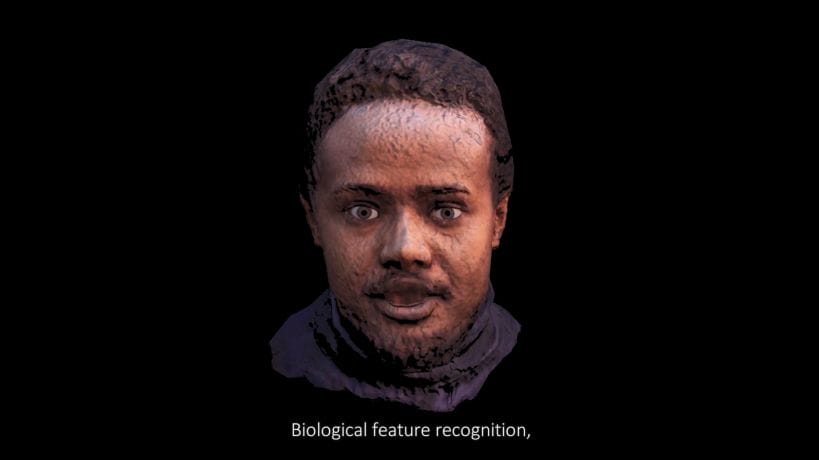

Facial tracking software is inherently biased towards Caucasian bone structures, skin color and facial contouring. This means that users from other racial backgrounds run into difficulties both when tracking the face and during the creative process of 3D modelling.

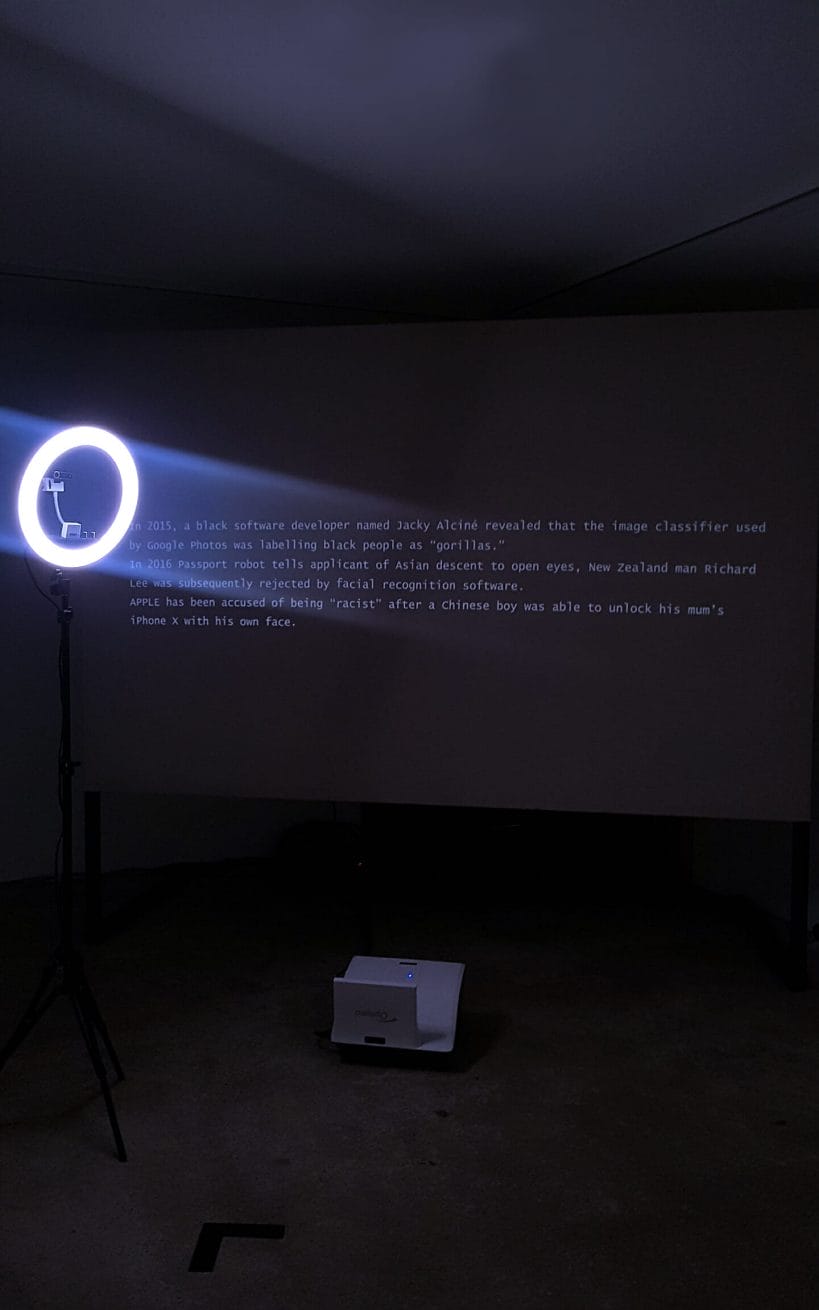

In 2015, a black software developer named Jacky Alciné revealed that the image classifier used by Google Photos was labelling black people as “gorillas.” In 2016, New Zealand's online passport photo checker told Richard Lee, an applicant of Asian descent, to open his eyes, and the facial recognition software rejected his photo. Apple has been accused of being “racist” after a Chinese boy was able to unlock his mum’s iPhone X with his own face.

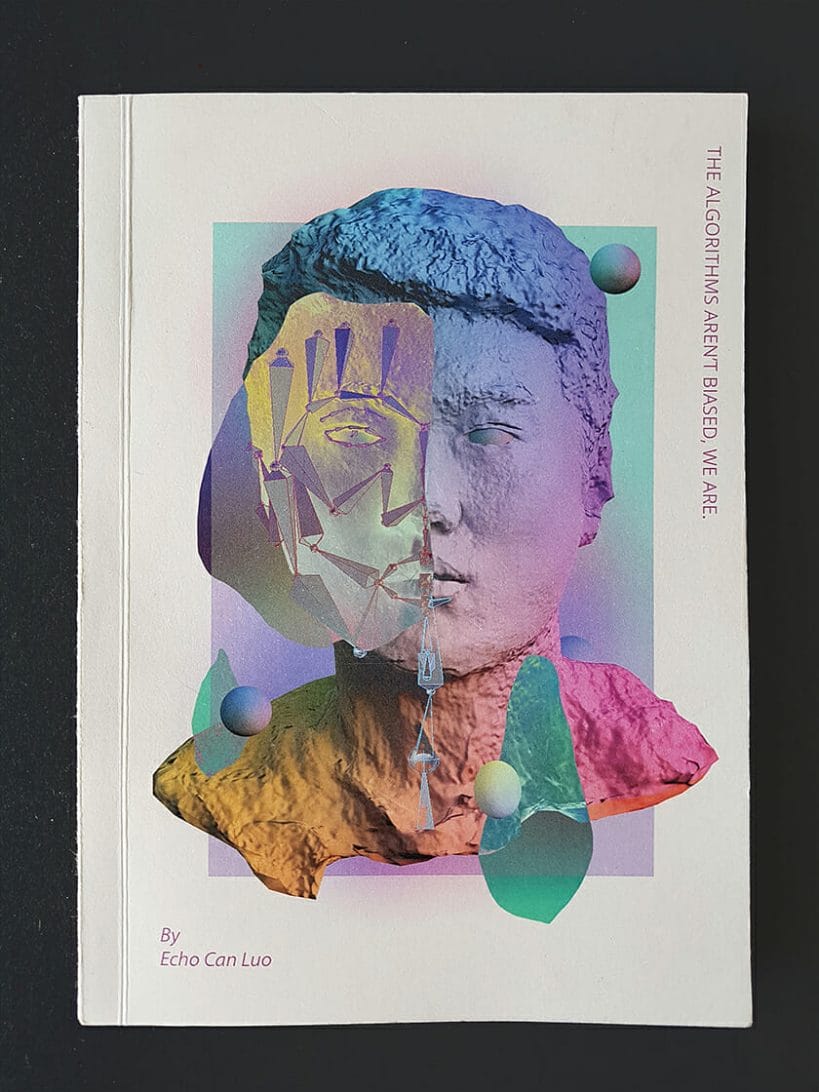

“Nicely nicely all the time!”, a 3D interactive installation by Echo Can Luo, originally set out to tell the story of minorities in Germany. But early in the process, Luo realized it was impossible to use generative 3D software to model non-Caucasian faces. As a result, the project includes a survey of 3D modelling software and its inherent bias, and finally a video that tells the story of immigrants giving up their (biometric) data.

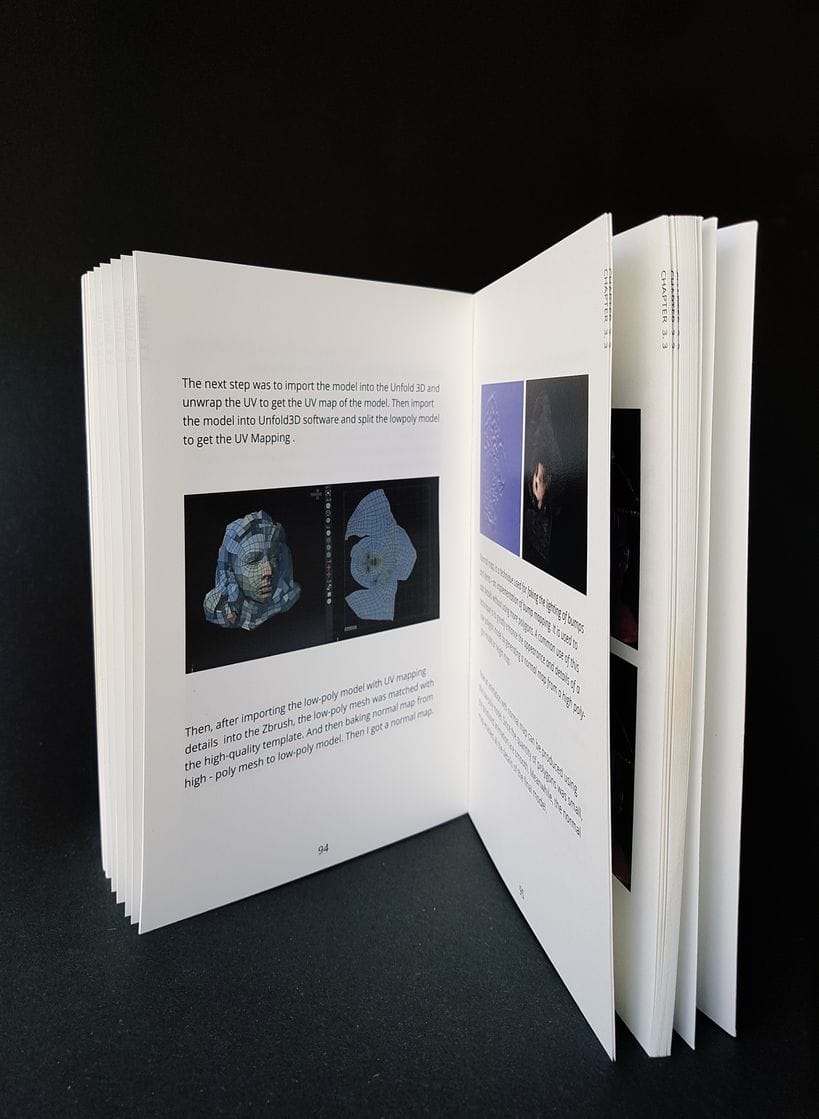

In the majority of the programs she used, the bone structure, skin, texture, hair and height of the body are all designed to represent Caucasian body types. As a result, it is extremely difficult and demanding to create an Asian, African or brown-skinned avatar. Because the Caucasian 3D avatar is the base of all possible models, she had to choose: either go through the labour-intensive process of modifying this base until it no longer showed, or make a compromise. This was the only way to cohesively match the two races as a whole.

As the project developed further, she realized that the tools and software she used were themselves biased. One could argue that this is unavoidable at the beginning of a new technology. One might overlook such issues as minor, or tell the artist that it only takes a little more time and energy to create a non-Caucasian 3D avatar. Once she noticed the issue, however, she realized that the computational and operational errors were caused by human beings: specifically, they result from the favoritism of those who collect the statistics and data the machines rely on. If the creators realized that the language within the program should be equally impartial to all of us, the biases and discrimination might not appear.

A lot of automated 3D modeling software has a certain degree of racial bias. Although all human faces share the same basic structure, the differences are to be found in the details. If the software ignores these differences and models all avatars on a single template, the result is discrimination and prejudice.

3.5 m * 5.5 m, interactive installation, Säulengang, Kunsthochschule Kassel

5.5 m * 5.5 m, interactive installation, Rundgang, Kunsthochschule Kassel

Video screenshot

Click to enter my research on face tracking and 3D modeling bias

THE ALGORITHMS AREN’T BIASED, WE ARE. (Thesis of “Nicely nicely all the time!”)